CallSense

Exploring Context-Aware Post-Call Memory Support in iOS

Role: Product Manager (Speculative / Exploratory)

Focus: Early opportunity discovery · System UX · Trust & privacy · Validation strategy

The Problem

Important commitments are frequently made during phone calls — dates, follow-ups, reference numbers, links, next steps — yet iOS provides limited support for reliably capturing them.

In practice, users compensate through fragile workarounds:

Relying on memory

Writing things down in Notes, on paper, or in spreadsheets

Asking others to repeat or text details

Performing verbal “read-backs” to avoid mistakes

These workarounds increase cognitive load and create risk at the moment responsibility passes from conversation to action.

Before designing a solution, I looked for evidence that this was a real, recurring behavioral gap, not a personal annoyance.

Real-World Behavioral Precedent

AI-powered meeting summaries have already seen strong real-world adoption. Zoom reports that its AI Companion has been enabled by 700,000+ customer accounts, with usage of AI-generated meeting summaries doubling quarter-over-quarter following launch. In user surveys, many AI feature users report saving 30+ minutes per day through automated summaries and insights.

While these tools focus on scheduled meetings rather than phone calls, they demonstrate a broader pattern:

people actively adopt tools that help them capture, recall, and act on structured information from conversations.

This suggested that the underlying problem, conversational handoff and follow-through, already exists at scale; phone calls are simply a context where iOS support remains limited.

Early User Behavior Interviews

To ground the concept in lived behavior, I spoke with professionals across different roles about how they handle important information discussed on phone calls.

Across interviews, several consistent patterns emerged:

Calls create cognitive and emotional load, often followed by a brief decompression or reflection period

Forgetting action items or details is common, even among highly organized users

Every participant described manual capture behaviors (Notes, paper, calendars, spreadsheets, or verbal read-backs)

Writing things down — lists, links, exact details — is consistently described as more reliable than memory

Capture often fails due to context, not intent (e.g., driving, walking, hands occupied)

Several participants noted that when information is detailed or handed off for follow-up, they frequently ask others to send written confirmation, links, or exact lists to avoid mistakes.

Directional synthesis:

Roughly 10 of 12 participants described forgetting, or nearly forgetting, call details

100% described some form of manual post-call capture

A majority cited timing and physical context, not motivation, as the primary source of friction

Key insight:

This is not a memory problem. It is a handoff problem: moving responsibility from conversation to a system of record.

Why a Prototype

Given the system-level nature of the opportunity, I chose to prototype early rather than jump directly to large-scale research or implementation.

The prototype was used to reason about:

Whether a post-call moment feels helpful or intrusive

How much control users expect

Where trust and privacy boundaries emerge

How this could integrate naturally with existing iOS surfaces

The goal was not to validate demand at scale, but to test the shape of the interaction and evaluate whether it aligned with Apple’s principles of restraint, transparency, and user control.

The Concept: CallSense

CallSense explores a privacy-first, post-call experience that helps users capture important information discussed during a phone call — without recording calls or acting without consent.

Rather than summarizing entire conversations, the system focuses on lightweight, actionable signals:

Tasks

Dates and times

Identifiers

Links or references

These are surfaced after the call and require explicit user confirmation or dismissal.
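To make the confirmation requirement concrete, here is a minimal data-model sketch. It is written in Python purely as a neutral illustration, not as iOS code, and the names `SignalKind`, `Status`, `Suggestion`, and `DEFAULT_DESTINATION` are hypothetical. Routing dates to Reminders and identifiers or links to Notes follows the example prompts in Step 2; sending tasks to Reminders is my own assumption.

```python
from dataclasses import dataclass
from enum import Enum


class SignalKind(Enum):
    """The four lightweight signal types CallSense looks for."""
    TASK = "task"
    DATE_TIME = "date_time"
    IDENTIFIER = "identifier"
    LINK = "link"


class Status(Enum):
    PENDING = "pending"      # surfaced after the call, awaiting the user
    CONFIRMED = "confirmed"  # explicitly approved by the user
    DISMISSED = "dismissed"  # declined; nothing is saved


# Hypothetical routing into existing apps.
DEFAULT_DESTINATION = {
    SignalKind.TASK: "Reminders",  # assumption
    SignalKind.DATE_TIME: "Reminders",
    SignalKind.IDENTIFIER: "Notes",
    SignalKind.LINK: "Notes",
}


@dataclass
class Suggestion:
    kind: SignalKind
    text: str
    status: Status = Status.PENDING  # every suggestion starts unapproved

    @property
    def destination(self) -> str:
        return DEFAULT_DESTINATION[self.kind]

    def may_be_saved(self) -> bool:
        # Only an explicit confirmation makes a suggestion actionable.
        return self.status is Status.CONFIRMED


# Usage: an illustrative reference number mentioned on a call.
s = Suggestion(SignalKind.IDENTIFIER, "Reference 12345")
print(s.destination, s.may_be_saved())  # Notes False
s.status = Status.CONFIRMED
print(s.may_be_saved())  # True
```

Because every suggestion starts as pending, nothing reaches an existing app by default; approval is a state change the user makes, not one the system infers.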

Design Principles

User-initiated or explicitly approved

On-device and privacy-forward

Focused on intent, not transcription

Integrated with existing apps like Reminders and Notes

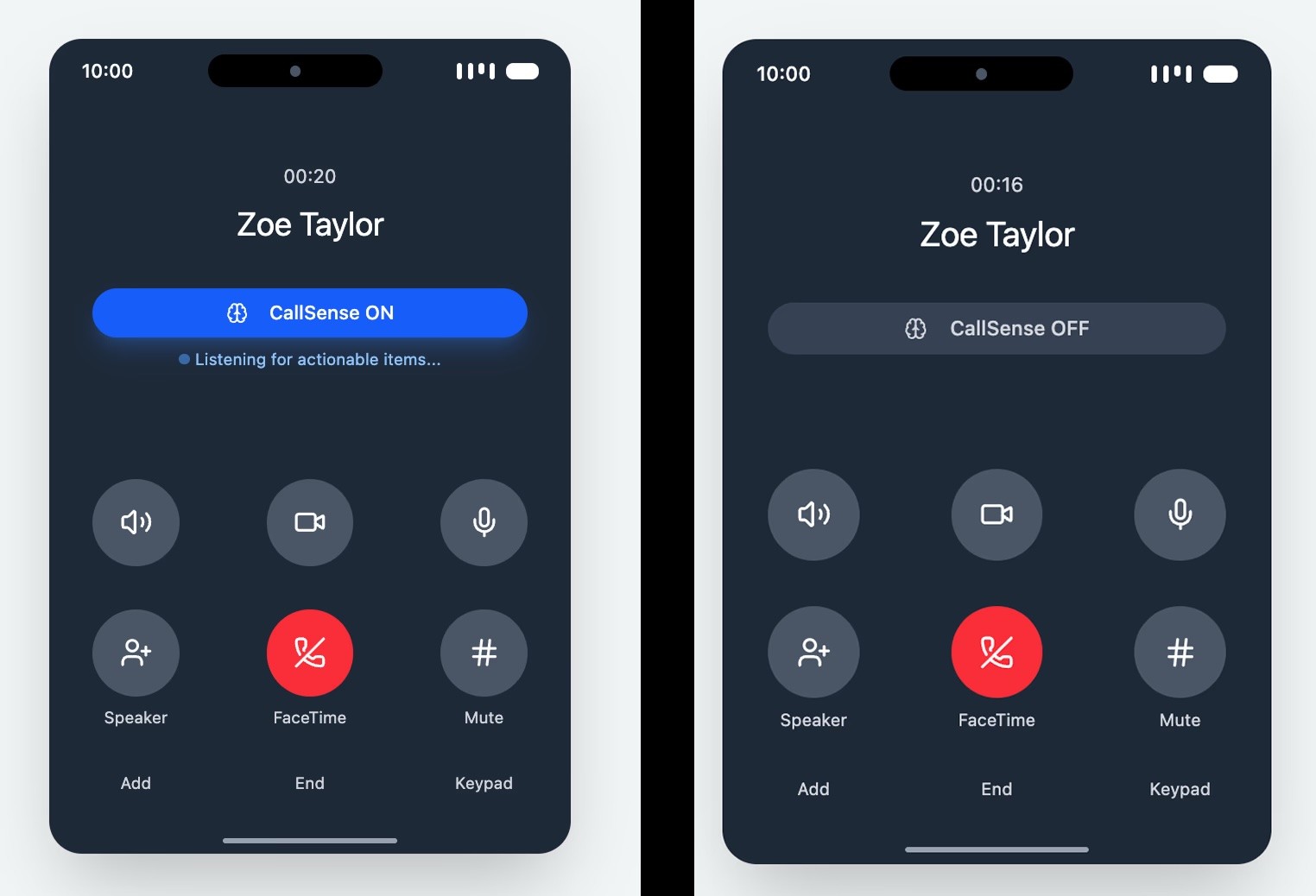

Privacy-Forward ON / OFF States

Clear, explicit control over whether CallSense is active

No hidden automation

Language that emphasizes review and approval, not recording

Context-Aware Assistance

Interview insights consistently showed that capture breaks down when users are moving, driving, or physically occupied, even when the information is important.

Because the device can already infer motion state on-device (for example, whether the user is stationary, walking, or driving), CallSense could adapt its behavior to context.

Rather than always intervening, motion becomes a confidence signal:

If the user was in motion during a call, the system is more likely to suggest a post-call review

If the user was stationary and able to capture manually, the system can remain silent

Critically, this behavior would remain fully user-controlled, with options such as:

Suggest CallSense only when I’m moving

Suggest after all calls

Never suggest automatically
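The motion rule and the three user settings can be sketched as a single decision function. This is a simplified illustration under stated assumptions: the names are hypothetical, and motion is reduced to one boolean (`moving_during_call`), whereas a real system might weigh how much of the call was spent moving. On iOS, a signal like this could plausibly come from Core Motion activity classification (`CMMotionActivity`), but nothing in the sketch depends on a specific API.

```python
from enum import Enum


class SuggestionPolicy(Enum):
    """The three user-facing settings from the concept."""
    ONLY_WHEN_MOVING = "only_when_moving"
    AFTER_ALL_CALLS = "after_all_calls"
    NEVER = "never"


def should_suggest_review(policy: SuggestionPolicy, moving_during_call: bool) -> bool:
    """Decide whether to surface the post-call review sheet."""
    if policy is SuggestionPolicy.NEVER:
        return False  # the user's setting always wins over context
    if policy is SuggestionPolicy.AFTER_ALL_CALLS:
        return True
    # ONLY_WHEN_MOVING: motion is the confidence signal. A stationary user
    # is assumed able to capture manually, so the system stays silent.
    return moving_during_call


for policy in SuggestionPolicy:
    print(policy.value, should_suggest_review(policy, True), should_suggest_review(policy, False))
# only_when_moving True False
# after_all_calls True True
# never False False
```

The user's setting is checked first, so context can only influence suggestions within the bounds the user has chosen; it never overrides “Never suggest automatically”.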

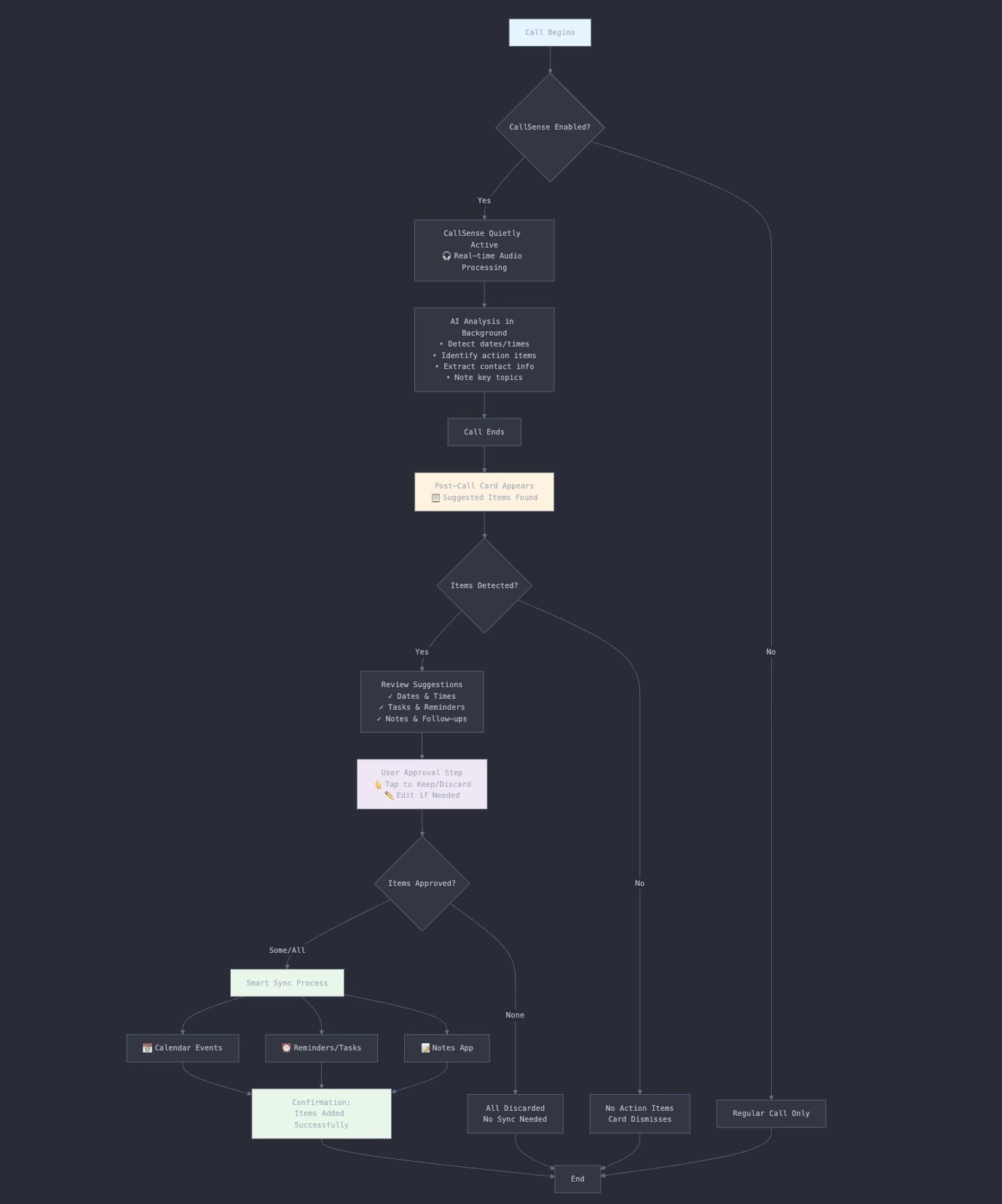

System Flow & Interaction Shape

At a high level, CallSense operates as a lightweight post-call review loop:

Call ends

Potential signals detected (on-device)

Post-call review sheet surfaces

User confirms, edits, or dismisses suggestions

Approved items sync to existing apps

No action is taken without approval. Dismissal is always a first-class outcome.
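The confirm / edit / dismiss step can be sketched as a pure function that returns only approved items. This is a hypothetical Python illustration: `resolve_review`, `UserChoice`, and `Decision` are invented names, and the actual sync to Reminders or Notes is deliberately left out. One assumption worth stating: closing the sheet without making a choice is treated exactly like dismissal.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Decision(Enum):
    CONFIRM = "confirm"
    EDIT = "edit"
    DISMISS = "dismiss"


@dataclass
class Suggestion:
    id: str
    text: str
    destination: str  # e.g. "Reminders" or "Notes"


@dataclass
class UserChoice:
    decision: Decision
    edited_text: Optional[str] = None  # only meaningful with Decision.EDIT


def resolve_review(
    suggestions: List[Suggestion],
    choices: Dict[str, UserChoice],
) -> List[Suggestion]:
    """Return only the items the user explicitly approved.

    A suggestion with no recorded choice (the sheet was closed) is handled
    like a dismissal: it is dropped and nothing is saved.
    """
    approved: List[Suggestion] = []
    for s in suggestions:
        choice = choices.get(s.id)
        if choice is None or choice.decision is Decision.DISMISS:
            continue
        if choice.decision is Decision.EDIT and choice.edited_text:
            # Keep the destination, replace the wording with the user's edit.
            approved.append(Suggestion(s.id, choice.edited_text, s.destination))
        else:
            # CONFIRM, or an EDIT without replacement text, keeps the original.
            approved.append(s)
    return approved


items = [
    Suggestion("a", "Call back Friday at 3 pm", "Reminders"),
    Suggestion("b", "Reference 12345", "Notes"),
]
approved = resolve_review(items, {"a": UserChoice(Decision.DISMISS),
                                  "b": UserChoice(Decision.EDIT, "Reference 12346")})
print([(s.destination, s.text) for s in approved])  # [('Notes', 'Reference 12346')]
```

Because the function returns a new list and stores nothing, a dismissed or ignored suggestion simply disappears, and an empty result is a normal, valid outcome.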

End-to-End System Flow

Explicit dismissal paths

No-action as a valid outcome

Integration with Reminders / Notes

No transcript storage

What I’d Do Next

If this concept were under active exploration at Apple, the next step would not be a full AI rollout. It would be incremental validation designed to reduce risk around trust and usefulness.

Step 1: Validate the Post-Call Moment (No AI)

Ship a lightweight post-call review sheet (internal or beta) with manual actions only:

Add a reminder

Save a note

Save contact details

Success signal: repeated, voluntary use across calls

Failure signal: consistent dismissal or opt-out

Step 2: Introduce Suggestions, Not Automation

If the moment proves valuable, introduce on-device suggestions the user can accept or ignore:

“A time was mentioned — add a reminder?”

“A reference was shared — save a note?”

All actions remain explicit, on-device, and user-approved.

Step 3: Measure What Matters

Primary signals:

% of calls with at least one saved action

Follow-through completion rate

User-reported reduction in forgotten commitments

Negative signals:

Trust erosion

Perceived intrusiveness

Increased opt-out
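The quantitative signals could be computed from per-call records roughly as follows. The record shape and metric definitions are my assumptions, not an established instrumentation plan: follow-through is measured as completed items divided by saved items, and opt-out as the share of enrolled users who turned CallSense off. User-reported reduction in forgotten commitments, trust erosion, and perceived intrusiveness would more likely come from surveys and qualitative feedback, so they are not modeled here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CallRecord:
    saved_actions: int      # items the user approved after this call
    completed_actions: int  # of those, how many were later completed


def share_of_calls_with_saved_action(calls: List[CallRecord]) -> float:
    """Fraction of calls that produced at least one approved item."""
    if not calls:
        return 0.0
    return sum(1 for c in calls if c.saved_actions > 0) / len(calls)


def follow_through_rate(calls: List[CallRecord]) -> float:
    """Completed items divided by saved items (assumed definition)."""
    saved = sum(c.saved_actions for c in calls)
    if saved == 0:
        return 0.0
    return sum(c.completed_actions for c in calls) / saved


def opt_out_rate(enrolled_users: int, opted_out_users: int) -> float:
    """Share of enrolled users who turned the feature off."""
    if enrolled_users == 0:
        return 0.0
    return opted_out_users / enrolled_users


# Illustrative inputs only, not study results.
calls = [CallRecord(2, 1), CallRecord(0, 0), CallRecord(1, 1), CallRecord(0, 0)]
print(share_of_calls_with_saved_action(calls))  # 0.5
print(follow_through_rate(calls))  # 0.6666666666666666
```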

Step 4: Decide to Scale or Kill

CallSense would only justify broader development if it:

Solves a frequent, meaningful problem

Integrates naturally into iOS workflows

Preserves user trust and control

Otherwise, it should be paused or killed, even if the technology works.

Takeaway

This project explores how a system-level feature earns its way into iOS, not through novelty, but through evidence, restraint, and responsible validation.